- Joined: Apr 12, 2021
- Messages: 903

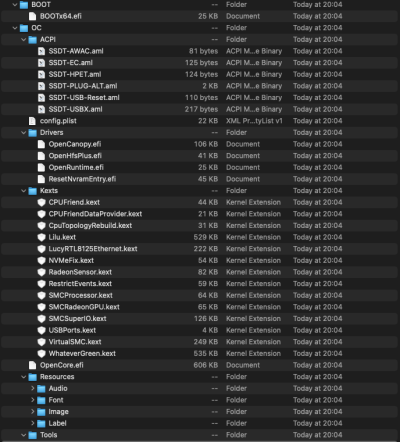

- Motherboard: Asus Z590 ROG Maximus XIII Hero
- CPU: i9-11900K
- Graphics: RX 6600 XT
- Mac: Classic Mac

- Mobile Phone

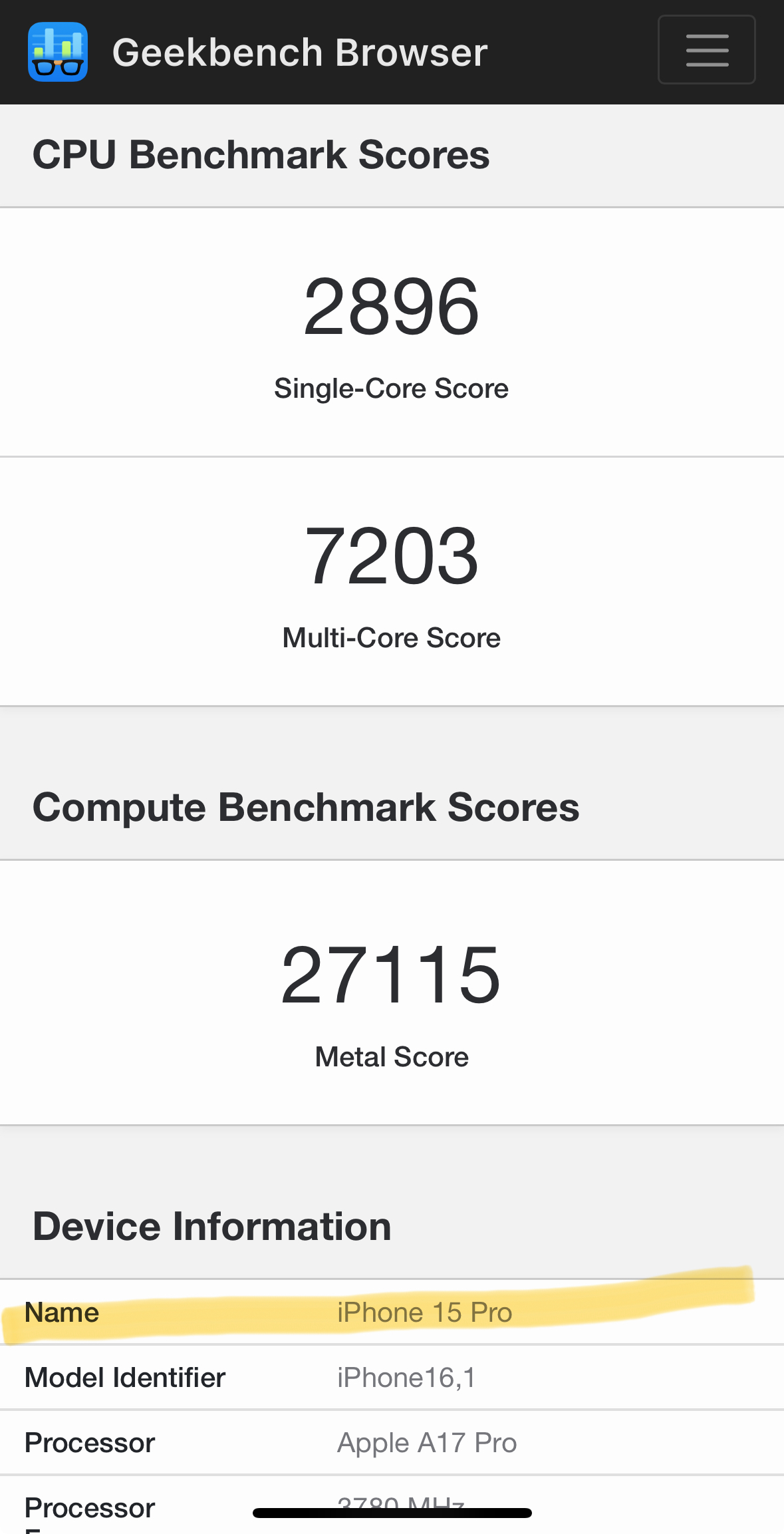

It is an Apple-oriented benchmark. But we're talking Apple...

If benchmark performance is your measure, these numbers should have me scratching my head as to why the PC is no longer evolving.

I had suspected that with 12th gen, Intel had accelerated some gaming desktop performance juju they might have been holding back, to counter the buzz on AppleSi.

Now they're on a yearly generational release while AppleSi mobile is outpacing them. Are they just bumping major version numbers at this point?

I hope the 14th gen doesn't get yet another new socket.

I suggest wondering a bit more about all the support units employed by Apple, for which there are no handy benchmarks. Like why did they move the NVMe controller away from the flash? What's that neural engine really doing? Etc.

I recently updated a 2012 MacBook Pro to Ventura. It works and is usable, but video playback is no longer accelerated, and background tasks like media analysis (photo scanning) cause high CPU usage, revving fans, and rapid battery drain. It's obvious why it's no longer supported: it's too inefficient when doing ordinary things an iPhone does on battery all day.

So how does new Intel or AMD kit figure into Apple's bag of tricks for the total package? We should assume Apple is no longer optimizing for the accelerator features on IA. But if all you have to grok is Geekbench, you might not notice.

In late 2023, with 14th gen, is it now oranges and Apples?
