- Joined

- Jun 14, 2015

- Messages

- 82

- Motherboard

- MSI Z170A Krait

- CPU

- i7-6700K

- Graphics

- Vega 64

A bit late to the party, but here's something I want to point out.

Even though you edited the vBIOS and CUDA-Z reads the matching frequency, the card is likely still running at the same speed as before.

I've done many tests and here are some results:

980 Ti WaterForce stock: 1318 MHz + 7000 MHz (actually runs at 1060 MHz + 7000 MHz)

(Sorry, no CUDA-Z screenshot, but it reported 1318 MHz, which matches the spec of this card.)

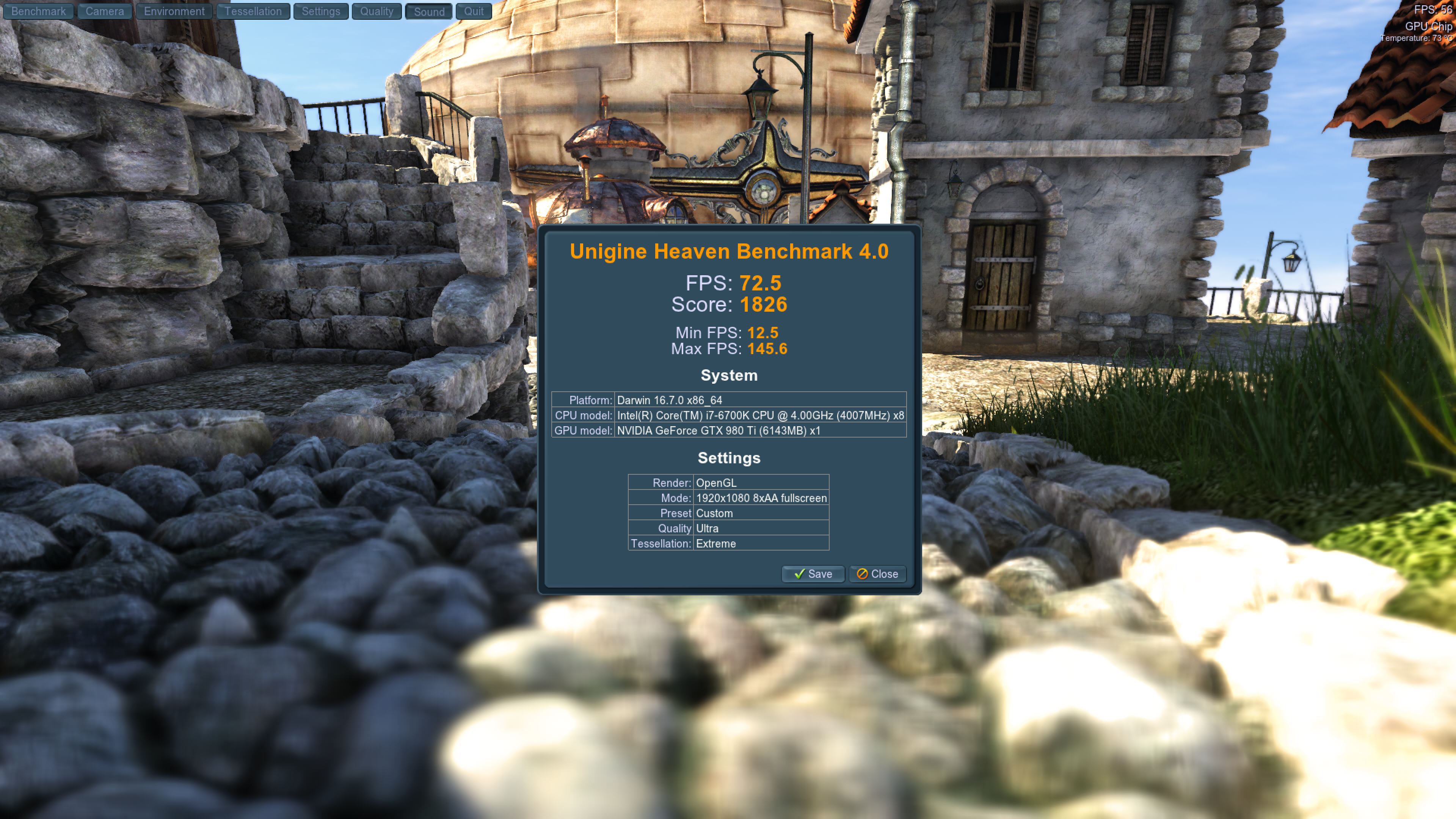

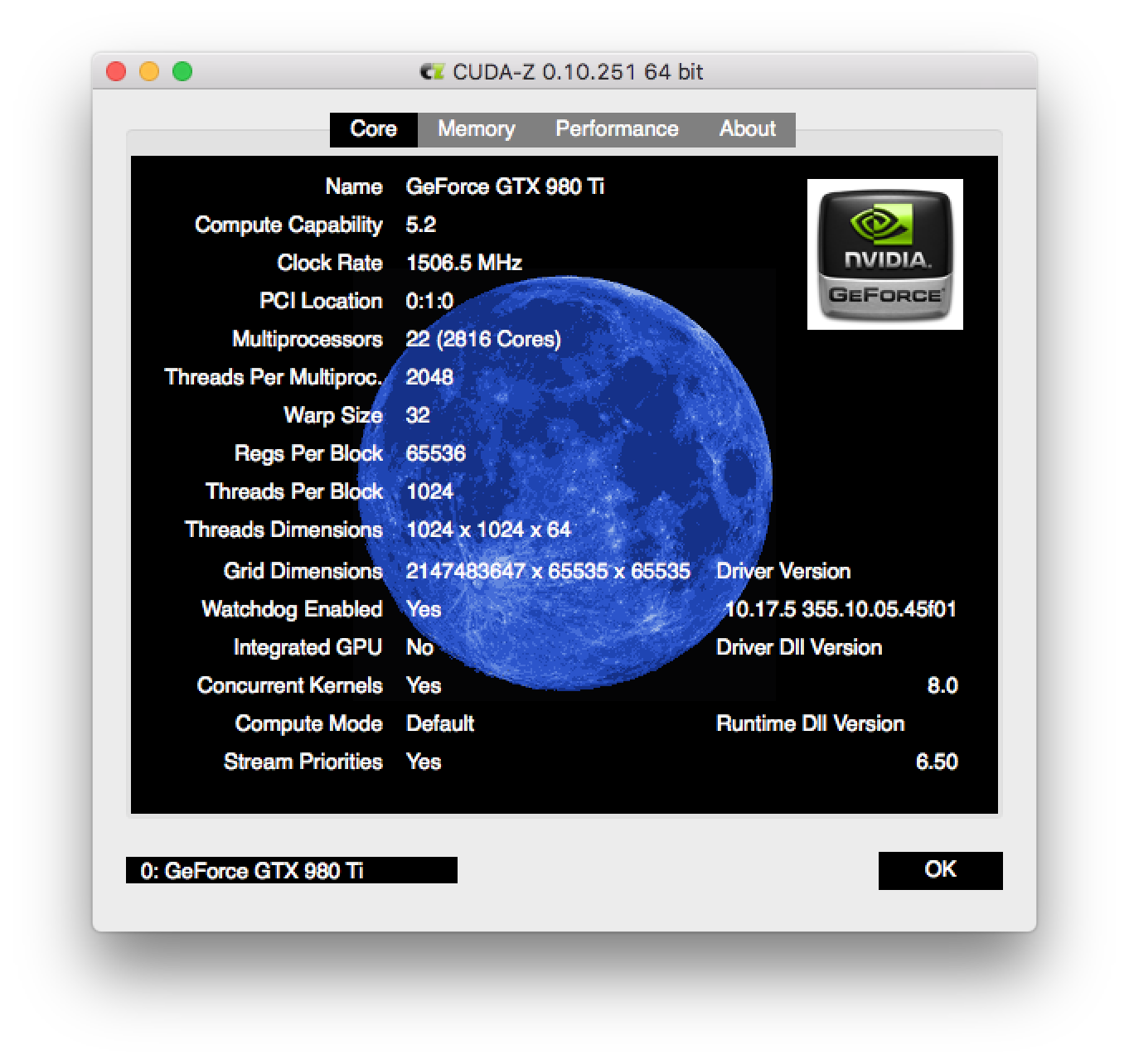

However, the result of the custom vBIOS with 1506 MHz + 7200 MHz:

I know there is some margin of error, but it doesn't make sense that an overclocked vBIOS that isn't overheating shows no improvement, and even scores worse than the stock one.

And if you read the clock speed from HWMonitor, both are running at 1060 MHz.

So here's my conclusion: overclocking the GPU by editing the vBIOS for macOS is possible, but you'll have to raise the boost table a lot higher than the clock you actually want in order to get any clock gain over a "reference card".

What and why? From what I've experienced, the WebDriver treats all vendor cards as a reference card with no GPU Boost. Take our 980 Ti: the reference card has a 1000 MHz base clock, boosts up to 1075 MHz, and gets +100 MHz max when GPU Boost is available. So I think the 1060 MHz reading from HWMonitor is correct, and the one from CUDA-Z is somehow... correct as well, since it reads the data directly from the vBIOS.
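To put the numbers above side by side, here is a rough Python sketch. It only does arithmetic on the clocks quoted in this post (1318 MHz and 1506 MHz rated, 1060 MHz observed, 1000/1075 MHz reference base/boost). The function and constant names are my own for illustration, and nothing here reads from the GPU or the driver.

```python
# Clocks quoted in the post, in MHz. Nothing here is measured by this script.
REFERENCE_BASE = 1000
REFERENCE_BOOST = 1075
OBSERVED_MACOS = 1060  # HWMonitor reading reported for both vBIOS images

RATED = {"stock vBIOS": 1318, "custom vBIOS": 1506}


def shortfall(rated_mhz, observed_mhz):
    """Return (MHz below rated, percent below rated)."""
    delta = rated_mhz - observed_mhz
    return delta, 100.0 * delta / rated_mhz


def within_reference_window(observed_mhz):
    """True if the observed clock sits between reference base and boost."""
    return REFERENCE_BASE <= observed_mhz <= REFERENCE_BOOST


if __name__ == "__main__":
    for name, rated in RATED.items():
        delta, pct = shortfall(rated, OBSERVED_MACOS)
        print(f"{name}: rated {rated} MHz, observed {OBSERVED_MACOS} MHz, "
              f"{delta} MHz ({pct:.1f}%) below rated")
    print("inside reference window:", within_reference_window(OBSERVED_MACOS))
```

Both vBIOS images land at the same observed clock, and that clock sits inside the reference base/boost range, which is consistent with the "treated as a reference card" guess but does not prove it.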

So what if we just increase the boost table as I mentioned? Well, because the baseline differs between the two systems (Windows: correct for the card, Mac: reference numbers), you end up with a voltage table that doesn't match on either Windows or Mac, so you can't get both of them running at the clock you set at the same time. That's the reason I ended up giving up on this, unless some day NVIDIA or Apple fixes this issue.
