Over at the thread...

Choosing a Compatible SSD For a Boot Drive

Introduction to NVMe Choices If you have any previous hackintosh experience, you'll know that a limited number of hardware components have native support in macOS. You can't buy just any graphics card and expect it to work. Only certain Broadcom models of Wifi/BT cards have native support etc...

www.tonymacx86.com

...a poster (OP) asked whether spontaneous boot failures he was seeing, without any other system changes, might be related to an incompatible drive.

The OP question wasn't quite appropriate to that thread, but it's a fair question in context, as the thread originator, @trs96, offers views on Apple SSD design, hazards, trends in the consumer NVMe market, and helpful recommendations based on experience from the forums.

I took a fair amount of effort to assist OP, with many suggestions for troubleshooting, which I wrote in a way I hoped would be helpful to anyone with storage failures. Unfortunately for me, my effort was dumped down the memory hole when my posts were moderated out of existence for being too far off-topic for that thread. That was fair, as I had only skimmed the thread's origin post, so my mistake. But I am not a moderator and I have no power to organize threads, so I just offer help in context where I can. Frustrating.

In order to make my frustration productive, I re-read the origin post and recalled many other articles and experiences on NVMe SSDs and macOS.

I began to wonder about the idea of SSD "compatibility." Given the limited information available on drive operation and macOS, I think we can only discuss "incompatibility," with "compatible" meaning applications of drives which haven't failed yet.

Rob: Just c'mon. What would it mean to you, that sentence - "I haven't seen Evil Dead II, yet"?

Barry: Well, to me it would mean you were a liar. You've seen it twice: once with Laura - Oops! - and once with me and Dick, remember? We had that conversation about the guy making Beretta shotgun ammunition offscreen in the 14th century.

I noticed some patterns on incompatibility, as follows:

Three main issues:

A—Power (excessive power consumption in laptops)

B—Kernel panics due to command timeouts

C—Trim stalls at boot (and in my experience also during installation and snapshot mgmt).

In rare cases there are two other problem areas:

D—Total device failure (rare but I've seen it myself repeatedly, and mentioned elsewhere)

E—Problems with external NVMe hanging

"A & B" have never affected me, so I have no comments. I assume NVMeFix is helpful. I no longer use NVMeFix on my build (SK-Hynix P41 Platinum boot drive and WD SN750 backup), with no ill effects. But I was using it with my failed Rocket 4s.

"D & E" have both affected me directly and gave rise to this thread. I suffered the complete failure of a pair of new Sabrent Rocket 4s under Big Sur when I was first assembling my build, and later with Monterey I had problems with a 980 Pro hanging when used with a Type-C external enclosure. The external hanging sent me on a long review and rebuild of my USB config, but I was never able to attribute the problem to USB mapping. My problems with external NVMe hanging abated with a later Monterey release, and Ventura has always worked fine.

This leaves "C", for which I went through a major headache when an otherwise perfectly fine 980 Pro, which I'd purchased to resolve my Sabrent Rocket 4 disasters, started taking 15 minutes to boot. It acted up after a Samsung firmware update under Monterey in early 2022. Early in my build I also used a 970 EVO without problems on Big Sur, but I needed a bigger drive. That 970 EVO predates a Samsung controller change to the model. I now use it every day as a USB-attached scratch drive. I've also worked with a WD Black SN750, which has always been perfectly reliable and speedy, but after an upgrade from Z590 Comet Lake to Rocket Lake and PCIe 4, I wanted to feel what the new bus might offer, so I chose the SK-Hynix P41 Platinum based on a study of reviews. It was the top performing drive in 2022.

As an aside, my conclusion re PCIe4 storage is it certainly gives the best Mac response I have seen. But it doesn't offer a meaningful usability advantage over PCIe3. Moreover, if you have Comet Lake dialed in, there's no value to upgrading to Rocket Lake. Advances for 12th and 13th generation are more significant steps forward.

Today on the forums, I see Samsung Trim stalls ("C") are the most commonly reported drive issue. But my point of view may be mostly confirmation bias. I don't know.

@trs96 is single-handedly winning the forums over to WD, which seems fine. WD has been consistently reliable for years, while SSD prices have fallen by half or more since 2021, so it's hard to go wrong. But there's evidence that many other choices can work.

At the same time, I see no solid evidence that there's any firm idea of "macOS compatibility". What we have is mostly anecdotal evidence of incompatibility (which includes my own stories) that at best amounts to "for some reason, certain drives don't work well, or don't work at all."

While there are various lists (linked below), I can't come up with any clear pattern other than the Samsung+Trim ("C") stall issues.

It gets confusing because I have seen posts which claim that a certain generation of Phison controller is no good, but then seen other posts which contradict this saying Phison good, Samsung Phoenix bad, etc.

The Compatibility thread above is agreeably preoccupied with WD. But its justifications are not satisfying. And while Apple is clearly going its own way on everything, I see Trim as the linchpin for incompatible hack NVMe.

Trim is a topic of aggravation for me because in the late 2000s I was a regular on the now bygone Macintouch, and everyone there including the site owner was going cattywumpas over Apple's lack of support for Trim on 3rd party drives, as if it were some great betrayal of the Mac user community. But even supposedly expert opinion could not coherently explain what Trim did, why it was needed, or any downside of not supporting it. The best article of that age was written for Ars Technica, but its conclusions begged the question: Trim is needed or it would. I did a lot of searching, and the best information I could come up with was academic papers justifying Trim for wear levelling, given contrived scenarios.

Old timers will recall "Trim enablers" and Apple ultimately offering the trimforce command, with a big warning "USE AT OWN RISK." Today, I think it's truly ironic that after Mac cognoscenti finally got the Trim support they always wanted, it's biting a new generation of enthusiasts in the backside. And still nobody really knows what Trim is good for or when to use it.

Obviously, I've got a bee in my bonnet on this stuff, so the rest of this post will be a compendium of annotated links pertaining to hazard "C", its workarounds, and my opinions on the general issue of SSD Trim. I hope this will provide a further helpful point of view on the situation from a particular perspective, and I welcome all thoughts and criticisms.

Off to the races...!

NVMe TRIM SPEC

In NVMe, Trim support is handled by an optional feature called "Dataset Management (DSM)."

You will find this referred to in the OpenCore documentation for "SetApfsTrimTimeout" quirk.

(Please read below the fold.)

NVM-Express-NVM-Command-Set-Specification (PDF)

Pg 28, section 3.2.3 — NVM Commandset: Dataset Management.

Summary:

The Dataset Management command is used by the host to indicate attributes for ranges of logical blocks. This includes attributes like frequency that data is read or written, access size, and other information that may be used to optimize performance and reliability. This command is advisory; a compliant controller may choose to take no action based on information [commands] provided.

The DSM has an interesting range of attributes (opcodes):

Pg 31, Figure 42: Dataset Management – Context Attributes

Interestingly, Ubuntu (and maybe other Linux distributions) exposes NVMe DSM operations as a user command, nvme-dsm (see the LINUX section at the end).

OPENCORE TRIM QUIRK

OpenCore Configuration Guide (as of 0.9.1) — SetApfsTrimTimeout.

The APFS filesystem Spacemanager treats space as either used or free. This may be different in other filesystems where the areas can be marked as used, free, and unmapped. All free space is trimmed (unmapped/deallocated) at macOS startup.

Users with boot stalls can look for Spacemanager console boot log entries, accompanied by high drive activity for the duration of the stall. Try watching your mainboard's drive access LED.

The vocabulary in the documentation is imprecise and doesn't exactly pertain to macOS. "Unmapped" and "deallocated" are figures of speech, not details about the design of Spaceman.

Moreover, the last sentence is a contradiction of the preceding sentence: the first says macOS has no state of "unmapped," it includes only "used or free," then it goes on to say all free space is "unmapped" at boot.

I read this as 'where other filesystems may track unmapped status, APFS does not.'

The trimming procedure for NVMe drives happens in LBA ranges due to the nature of the DSM command with up to 256 ranges per command. The more fragmented the [filesystem] is, the more commands are necessary to trim all free space. Depending on the SSD controller and the level of drive fragmentation, the trim procedure may take a lot of time, causing boot slowdown. The APFS driver explicitly ignores previously unmapped areas and repeatedly trims them on boot.

Meaning that Spaceman Trims everything marked as free without regard to previous status. The point about "fragmentation" seems incidental. It will never be explained how any particular number of DSM commands might lead to a slowdown.
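To put a number on the "more fragmentation, more commands" claim, here is a minimal sketch that uses only the 256-ranges-per-command limit quoted above. The function name and the sample extent counts are my own illustration, and it says nothing about how long any one command takes on a given controller, which is the part the documentation never explains.

```python
import math

# Limit quoted in the OpenCore text above: up to 256 LBA ranges per DSM command.
MAX_RANGES_PER_DSM = 256


def dsm_commands_needed(free_extents: int) -> int:
    """Minimum number of DSM commands needed to cover `free_extents` separate ranges."""
    if free_extents < 0:
        raise ValueError("free_extents must be non-negative")
    return math.ceil(free_extents / MAX_RANGES_PER_DSM)


# Hypothetical extent counts, purely for illustration.
for extents in (1, 256, 257, 100_000):
    print(f"{extents} free extents -> {dsm_commands_needed(extents)} DSM command(s)")
```

Even a volume with 100,000 separate free extents needs only a few hundred commands by this count, so the command count alone doesn't obviously account for minutes-long stalls.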

To mitigate against boot slowdowns, the macOS driver introduced a timeout (9.999999 seconds) that stops the Trim operation...

So read the timeout option as "if trimming isn't finished in 10 seconds, it is discontinued." The details are never explained.

On several controllers, such as Samsung..

...the time required for the drive to complete all the Spaceman DSM requests may far exceed the timeout.

Essentially, it means that the level of fragmentation is high, thus macOS will attempt to trim the same lower blocks that have previously been deallocated, but never have enough time to deallocate higher blocks.

Again, the terms "unmapped" and "deallocated" are used interchangeably in the documentation without a clear definition of either, so it's difficult to interpret these claims. In this OC documentation, "deallocate" is likely jargon, much like the too-commonly misused term "garbage collection" in other literature.

In the literature, the drive operations are known as follows:

"Unmap" is the SCSI command set term.

"Trim" is the ATA command set term.

"DSM" is the NVMe command set term.

"Discard" is a Linux term. I mention this because it's the only SW where we have visibility into the code.

I'm going to use "discard" from here on to mean the OS's (including macOS's) invocation of drive I/O commands.

With this clarification, we can move on to observe that we can't easily study the specific discards done by Spaceman, nor do we have details on Samsung's implementation of DSM functionality (which is optional), so there's no way to know why Spaceman stalls.

One plausible reason Samsung is slow is that its DSM commands are actually implemented, whereas on other drives they may not be! But there are many possibilities.

APFS Fragmentation may or may not be relevant. This is never discussed.

As Spaceman doesn't track discard status, there's no basis for any idea of Spaceman Trim progress outside the current boot cycle. In the event of a timeout, there can be no resumption of interrupted Trim progress. So we can assume that Spaceman issues discards for all free space, which goes as far as it can under the time limit. And this interpretation fits the reported behavior perfectly.
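My interpretation can be sketched as a toy model. This is not Spaceman's actual logic, which we can't see. It assumes a fixed, made-up cost per discarded range and a pass that always restarts from the first free range. All names and numbers are hypothetical.

```python
def boot_trim_pass(free_ranges, cost_ms_per_range, timeout_ms):
    """Discard ranges in order until the time budget is spent.

    Nothing is remembered between calls, so every boot starts from the first range.
    """
    elapsed_ms = 0
    discarded = []
    for lba_range in free_ranges:
        if elapsed_ms + cost_ms_per_range > timeout_ms:
            break  # out of time: the remaining (higher) ranges are never reached
        elapsed_ms += cost_ms_per_range
        discarded.append(lba_range)
    return discarded


# Hypothetical volume: 1000 free ranges given as (start LBA, length).
free_ranges = [(i * 1000, 500) for i in range(1000)]

# Hypothetical slow controller: 50 ms per range against a 10-second budget.
first_boot = boot_trim_pass(free_ranges, cost_ms_per_range=50, timeout_ms=10_000)
second_boot = boot_trim_pass(free_ranges, cost_ms_per_range=50, timeout_ms=10_000)

print(len(first_boot), "of", len(free_ranges), "ranges discarded per boot")
print("same ranges every boot:", first_boot == second_boot)
```

Under these assumptions the same low ranges are discarded on every boot and the higher ones are never touched, which matches the behavior the documentation describes.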

The outcome is that trimming on such SSDs will be non-functional soon after installation, resulting in additional wear on the flash.

Here, do not read "non-functional" to mean that the drive is rendered inoperative. Read it as: once Trim cycles cause boot to take minutes, the system is effectively non-functional, because rebooting a hack is routine in troubleshooting and minutes-long reboots are impractical.

One way to workaround the problem is to increase the timeout to an extremely high value, which at the cost of slow boot times (extra minutes) will ensure that all the blocks are trimmed. Setting this option to a high value, such as 4294967295 ensures that all blocks are trimmed.

At this point the patch is creating problems as much as it's working around them: DSM commands are issued for all free space, but why is this good? What is the "problem" exactly?

This next comment I find especially interesting:

Alternatively, use over-provisioning, if supported, or create a dedicated unmapped partition where the reserve blocks can be found by the controller.

The "found by the controller" simply makes no sense. The controller doesn't have awareness of the volume structure. That would be a compatibility nightmare. I discuss why below.

Conversely, the trim operation can be mostly disabled by setting a very low timeout value, while 0 entirely disables it. Refer to this article for details...

Можно ли эффективно использовать SSD без поддержки TRIM?

hxxps://interface31.ru/tech_it/2015/04/mozhno-li-effektivno-ispolzovat-ssd-bez-podderzhki-trim.html

A little detour here... The article is in Russian, so here's the translation:

Can an SSD be used effectively without TRIM support? | Notes of an IT specialist

The question in our headline quite often occupies the minds of system administrators. Indeed, which is better: to build a RAID array from solid-state drives but lose TRIM support, or to give up fault tolerance in favor of high performance? The situation is made worse by the fact that...

interface31-ru.translate.goog

It's a decent article. Please read it. Tldr Answer:

Yes! Very much so.

Note: The failsafe value -1 indicates that this patch will not be applied, such that apfs.kext will remain untouched.

Note 2: On macOS 12.0 and above, it is no longer possible to specify trim timeout. However, trim can be disabled by setting 0.

Note 3: Trim operations are only affected at booting phase when the startup volume is mounted. Either specifying timeout, or completely disabling trim with 0, will not affect normal macOS running.

As mentioned in the OC documentation, since Monterey only the values zero (0) and -1 are respected.
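For anyone who prefers to script the change rather than hand-edit config.plist, here is a minimal sketch using Python's standard plistlib. It assumes the quirk lives under Kernel -> Quirks as a plist integer, which is where I understand OpenCore keeps it. The helper name is mine, and the sample works on an in-memory stand-in rather than a real file, so check it against your own config before trusting it.

```python
import plistlib


def set_trim_timeout(config: dict, value: int) -> dict:
    """Set Kernel -> Quirks -> SetApfsTrimTimeout in a loaded OpenCore config."""
    quirks = config.setdefault("Kernel", {}).setdefault("Quirks", {})
    quirks["SetApfsTrimTimeout"] = value
    return config


# In-memory stand-in for a real config.plist (assumption: the key starts at the failsafe, -1).
raw = plistlib.dumps({"Kernel": {"Quirks": {"SetApfsTrimTimeout": -1}}})

config = plistlib.loads(raw)
set_trim_timeout(config, 0)  # 0 = ask for boot-time trim to be disabled
updated = plistlib.dumps(config)

print(updated.decode())
```

With a real file you would read and write it with plistlib.load and plistlib.dump, after making a backup. If the timeout unit is microseconds, as I recall the OpenCore documentation stating, the maximum value 4294967295 works out to roughly 71.6 minutes.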

My experience with Samsung 980 Pro, as of macOS 12 and 13, is that a timeout value of 0 is not reliable to disable discards:

—Spaceman may discard even if a timeout of 0 is supplied.

—If Spaceman is not allowed to complete, it will run again on the next boot.

—If Spaceman is allowed to complete, it may not attempt discard again until some unknown threshold event. There is some trigger condition which will cause a Spaceman discard pass over all free space in the volume irrespective of the timeout. I believe the trigger is related to system volume snapshot state changes. This means full discard passes are run during macOS update installation. When the discard pass runs, the time required depends primarily on the amount of volume free space.

On the 980 Pro, the discard DSM operations count against wear, according to Samsung Magician.

MY PERSONAL OBSERVATIONS ON THE HISTORY OF TRIM (DSM, UNMAP)

The essence of Trim is the assumption that it helps drive performance for the host to inform the drive of LBA allocation status. This assumption is rooted in the following facts about flash storage devices:

—The drive presents its store as LBAs (logical block addresses, 0 to N-1) spanning the advertised extent of the drive. Drive commands are issued for ranges of LBAs.

—The drive must handle LBA writes at a flash-block level, where flash blocks are large multiples (10s or more) of LBA blocks. Internally, the controller translates LBAs into page and cell addresses according to the structure of the flash and whatever proprietary juju the controller implements.

—In order to write to an LBA block, the portion of the flash block to be updated must be in a fresh state.

—When fresh, the write request for an LBA-block-sized area (which can be orders of magnitude smaller than the affected flash block) can be written just once, after which it must be refreshed. Other fresh regions of the same flash block can be written, at any time.

—Refreshing written blocks can only be done by refreshing the entire flash block which contains them. So if any LBA block within a flash block is to be rewritten, the entire flash block must first be refreshed.

—Flash blocks tolerate a limited number of refresh cycles (around 1000) after which the block becomes unreliable.

—These features of flash storage lead to a performance effect widely termed write-amplification: small writes from the host may produce orders of magnitude larger effects internal to the drive, affecting throughput and wear. A drive-internal control stratum called the "flash translation layer (FTL)" determines how LBAs are mapped onto flash blocks. Using various levels of caching and allocation, the controller manages the wear and performance implications of write-amplification.
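The arithmetic behind write-amplification is simple enough to sketch. The sizes below are assumptions picked for illustration (a 4 KiB LBA block and a flash block holding 256 of them), not figures for any real drive, and the scenario is the worst case with no spare flash and no caching.

```python
def write_amplification(host_bytes: int, flash_bytes: int) -> float:
    """Ratio of bytes physically written to flash over bytes the host asked to write."""
    return flash_bytes / host_bytes


# Hypothetical geometry, for illustration only.
LBA_BYTES = 4096        # one LBA block
LBAS_PER_BLOCK = 256    # LBA blocks per flash block

# Worst case: rewriting one LBA inside a fully written flash block forces the
# drive to refresh the whole block and write all of its contents back.
flash_bytes = LBA_BYTES * LBAS_PER_BLOCK
print("write amplification:", write_amplification(LBA_BYTES, flash_bytes))
```

A real FTL exists precisely to stay far away from this worst case, by redirecting the write to already-fresh flash and cleaning up later.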

The history of Trim is with Compaq and Microsoft Windows. Flash was introduced in the mid 80s and SSDs used very simple controllers, basically directly exposing the flash structure to the storage driver. Microsoft extended the ATA commandset to add Trim, which allowed the driver to supply allocation hints to the drive.

Overview of ATA (Wikipedia)

en.m.wikipedia.org

Flash memory was introduced in the mid-1980s, at about the same time as the 80386, which is the birth-point of the modern PC.

The design of flash memory and accommodations for Trim predates generations of PC storage interface design, but at the time Microsoft was adding ATA Trim, it was a common design approach to place complex device mgmt cores in SW to gain value from the PC's most-expensive component, the CPU. As a case in point, in the 90s, end-user cost of PC telephone modems was reduced by putting the modulator/demodulator function in a device driver, rather than include a dedicated component in the modem.

See

Softmodem / Winmodem

en.wikipedia.org

In a similar vein but occurring a decade later than Softmodem, early SSDs had simple controllers where the ATA spec relied on programmed-I/O (host CPU instructions moving data to/from the device and testing status), so the CPU was always directly involved in IO.

In the evolution of the PC, there was a time when ATA direct host memory access by devices (DMA) was a significant, expensive advance. In the long run this cost was overcome by the enormous increases in logic density per unit cost of dedicated controllers. Today a $50 NVMe drive relies on a dedicated multi-core controller CPU hundreds of times more powerful than a 1980s IBM PC AT.

Trim in Computing (Wikipedia)

en.wikipedia.org

A trim command (known as TRIM in the ATA command set, and UNMAP in the SCSI command set) allows an operating system to inform a solid-state drive (SSD) which blocks of data are no longer considered to be 'in use' and therefore can be erased internally. Trim was introduced soon after SSDs were introduced. Because low-level operation of SSDs differs significantly from hard drives, the typical way in which operating systems handle operations like deletes and formats resulted in unanticipated progressive performance degradation of write operations on SSDs.

While the awareness by the host of deeply internal device mgmt details is as old as computing, the addition of ATA Trim came relatively late in the modular evolution of the PC, first appearing in Windows 7 (2009).

Windows 7 initially supported TRIM only for drives in the AT Attachment family including Parallel ATA and Serial ATA, and did not support this command for any other devices including Storport PCI-Express SSDs even if the device itself would accept the command.[41] It is confirmed that with native Microsoft drivers the TRIM command works on Windows 7 in AHCI and legacy IDE / ATA Mode.

The idea of Trim has always been to give the drive hints (or early notice) to prepare memory for reuse.

MORE THOUGHTS ON DSM

To be very clear, implementation of Trim-related commands has always been optional! The specs have always said so.

The only reliable way for a drive to know the disposition of its host LBA space is for the host to tell it. And as a case in point, we have macOS APFS Spaceman, which is system code pertaining to APFS structures.

But the host doesn't have to issue discards per se in order to inform the drive.

Even at the highest level of analysis, Trim commands work in several modes:

— Synchronous vs asynchronous: Does the OS storage manager have to wait for DSM to complete?

— NVMe and later-ATA-spec TRIM allows requests that affected extents be placed in a specific state, e.g. zeroed, vs indeterminate. There's an idea of "secure erase," etc.

— Discard works on LBA blocks, flash is reset by multi-block pages. What happens to discard of unaligned extents?

— What is the effect of discard on device command-queue? If discard is intermixed with other commands, what are the dependencies? Must the queue be flushed?

— If discard doesn't request a specific result state, is it OK for the drive to do nothing? e.g. interplay with secure erase functionality. IOW, can implementation options be partial?
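The alignment question in the list above can be made concrete with a toy calculation. It assumes LBAs map contiguously onto fixed-size flash blocks, which a real FTL does not guarantee, and the geometry is hypothetical. The point is only that an unaligned discard leaves partial blocks which the drive can't simply reset.

```python
def split_discard(start_lba: int, count: int, lbas_per_block: int):
    """Split a discard into flash blocks it fully covers and leftover LBAs.

    Returns (fully covered flash block indices, number of LBAs in partial blocks).
    Toy assumption: LBA n lives in flash block n // lbas_per_block.
    """
    end_lba = start_lba + count                   # exclusive end of the discard
    first_full = -(-start_lba // lbas_per_block)  # ceiling division
    last_full = end_lba // lbas_per_block         # exclusive
    if last_full <= first_full:
        return [], count                          # no block is fully covered
    full_blocks = list(range(first_full, last_full))
    partial_lbas = count - len(full_blocks) * lbas_per_block
    return full_blocks, partial_lbas


# Hypothetical geometry: 256 LBAs per flash block.
print(split_discard(256, 512, 256))  # aligned discard
print(split_discard(100, 600, 256))  # unaligned discard
print(split_discard(10, 5, 256))     # tiny discard inside one block
```

In the unaligned case only one block can be reset outright. The rest has to be tracked by the controller as stale fragments, or ignored, which the advisory nature of the command permits.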

We find many concerns which are complex and invite peculiar edge cases.

All these concerns lead to a conundrum about the basic value proposition of Trim:

THE DEBACLE OF MODERN SSD TRIM

If the host is going to pitch in on the chore of flash readiness, when is a good time for the OS to tell the drive to prepare? Boot time? During mainline system operation? Never?

What happens to system performance if deleting files must generate synchronous discards (effectively writes) for the deleted file's related storage extents? Normally file deletion is accomplished by updating tiny amount of meta-data, to forget the associated storage extents.

What if discards blow up the drive command queue?

What about race conditions between discards that imply a specific storage state and command synchrony? Etc...

If the OS is going to be involved in flash storage maintenance, there are more or less advantageous times for it to conduct this chore depending on workloads, which is why the OS tends to relegate Trim to a maintenance point, e.g., boot.

A survey of system admin literature reveals various approaches. Different OSes have their own ways. But this is most clearly seen in the Linux command structures which divide the chore of discard between volume mount options and an administrative command called fstrim(8). See Linux references at end.

Moreover, the ATA/NVMe specs keep evolving, the drive controllers keep getting more sophisticated, and different drives may treat DSM options differently.

Can we generalize a posture towards the administration of discard?

I think so. The progress of system design is rooted in the truism that good design modularizes concerns of subsystems to hide details in order to manage complexity; so don't expose the complexity of one subsystem to another without a clear theory of dependences that justifies the exposure. When a design gets modularity wrong, it becomes awkward and confusing.

SSD Trim is a case in point. By any measure, flash readiness for I/O must be regarded as a purely internal concern of a drive. There was a time when making the host aware of flash mgmt was appropriate. This was during the early development of SSDs, when it was impractical to use a dedicated controller and the FTL was very simple.

Thanks to powerful dedicated chips we now find increasing modularity across every aspect of computer systems design. But this doesn't imply seamless interoperation. Modularity may manifest in surprising ways, such as Apple sealing its devices and spreading functionality across components that are considered separate modules in the PC market. There is no contradiction: Apple's closed designs give them the power to add value through vertical integration because they hide subsystem dependencies that might be visible and therefore inappropriate for PC parts.

That SSD Trim has become a point of friction for systems administration is in keeping with the poor design cut that is modern Trim. In the NVM Express Commandset Spec, we find that the DSM sub-commandset allows the host to signal the drive with a wide range of hints for optimizing access, including caching hints. Again these are purely advisory, with the drive maker free not to implement them and still meet spec. But Apple may have other thoughts which result in foibles for hackintoshers.

Yet, even if Apple is a perfect student of PC modularity, with no internal agenda, we should expect that market pressures will result in many drive vendors creating partial DSM implementations with awkward side-effects or failure modes. And finally, even if the implementations were somehow perfect, drive vendors tailor capabilities and behavior to market segments. So the permutations are a nightmare.

If there's any single conclusion that a user might draw from all this today, I think it is "avoid discard."

Now I'll explain how a system can avoid the concern of discard.

THE MAGIC OF OVER PROVISIONING

First, I want to expose a common misunderstanding about over-provisioning:

There's an idea among Windows and Linux nerds that an SSD can be usefully over-provisioned by leaving "free space" via the partition table (e.g., GPT layout). As mentioned in the OC documentation above, there's this idea of "leaving space where the controller can find it," without any sense of wonder over how exactly the drive is informed of this "free" space. How does the drive get information about volume layout if the OS doesn't tell it? Could the drive know how to read the partition layout? NOT! The drive maker would then have to maintain compatibility with system SW, but we find no OS or filesystem compatibility listed by drive makers.

So how does this work? If just having space on a drive which is rarely touched helps the controller, there's almost always space that's rarely touched, unless the drive is full. So, from the drive's perspective, what's special about one area that goes untouched because it's outside any volume, versus an area that just hasn't been touched yet? There isn't any obvious distinction.

For routine PC uses, a full drive and the need for high performance are mutually exclusive: if the drive is full, then write performance and wear are a minimal concern, because storage is exhausted. Running a drive past full seems like a degenerate case. User-selectable headroom might make sense for a use case where a drive is kept close to full with a very dynamic workload, which is not typical of consumer devices.

On Windows, some drives come with optional utilities that include "over-provisioning" controls. These controls can have awareness of Windows storage layouts. In such cases, the utility might use this information to program the drive controller with proprietary layout-specific parameters, e.g., the Magician utility can tell the drive that a certain area is headroom for performance using secret attributes in the drive controller. But what does this have to do with OpenCore hackintosh, which has no such utility?

As far as I'm concerned, SSD over-provisioning secret sauce is at best academic and mostly irrelevant.

IOW, the OS is always supplying the drive the needed information for garbage collection through normal operation. Even without discard or explicit over-provisioning, the drive constantly receives information about flash reclamation from ordinary I/O: a write implies that the previously associated flash for its LBA extent is reclaimable.

There might be a scheduling advantage to Trim, in that signaling the opportunity for reclamation ahead of writes may allow hiding of latency in flash page setup. But a similar advantage can be arranged by pipelining writes and flash maintenance: when processing writes, the controller FTL sends the data for the requested LBAs to prepped flash pages, and notes that the previously associated pages can be refreshed. Writes aren't delayed by flash maintenance latency, which happens in the background. A slight over-provision in the drive (which can be hidden from the user) ensures there's always free flash ready to receive writes even when the drive is nearly full.
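Here is a toy FTL to illustrate the argument that ordinary writes already tell the drive what is reclaimable. It is a deliberately crude sketch: it tracks single pages, ignores erase-block granularity and wear levelling, and every name in it is made up. The spare page stands in for the "slight over-provision" mentioned above.

```python
class ToyFTL:
    """Maps LBAs to physical pages; overwrites leave stale pages behind."""

    def __init__(self, total_pages: int):
        self.free = list(range(total_pages))  # fresh pages ready for writes
        self.map = {}                         # LBA -> physical page holding live data
        self.stale = set()                    # pages whose data was superseded

    def write(self, lba: int) -> int:
        if not self.free:
            self.reclaim()                    # stand-in for background maintenance
        if not self.free:
            raise RuntimeError("no fresh or reclaimable pages left")
        page = self.free.pop(0)
        old = self.map.get(lba)
        if old is not None:
            self.stale.add(old)               # the overwrite itself marks the old page
        self.map[lba] = page
        return page

    def reclaim(self) -> None:
        """Refresh stale pages so they can accept writes again."""
        self.free.extend(sorted(self.stale))
        self.stale.clear()


ftl = ToyFTL(total_pages=4)   # 3 LBAs in use plus 1 spare page
for lba in (0, 1, 2):
    ftl.write(lba)
ftl.write(0)                  # overwrite: lands on the spare page, page 0 goes stale
ftl.write(1)                  # no fresh page left: reclaim page 0, then write
print(ftl.map, ftl.stale)
```

No discard was ever issued, yet the drive always knew which pages it could refresh. What discard adds is only advance notice for space the host has freed but not yet overwritten.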

My conclusion is that discard and provisioning should also be purely internal concerns for the drive logic, where drive traits may be marketed in a manner stylized and tweaked for some general purposes, much as spinning hard drives are marketed in 2023.

SOME OPENCORE HISTORY ASSOCIATED WITH SSD INCOMPATIBILITY

Here is a selection of links which capture the history of SSD compatibility with Open Core:

Dortania — Anti-Hackintosh Buyers Guide — Storage

Last updated 3/1/2023

Storage is a section that can be quite confusing as there a lot of mixed reports regarding PCIe/NVMe based devices, many of these reports are based off old information from back when PCIe/NVMe drives were not natively supported, like block size mattering or require kexts/.efi drivers.

Well, High Sierra brought native support for these types of drives but certain ones still do not work and can cause instability if not removed/blocked out at an ACPI level.

The other big issue surrounds all Samsung NVMe drives, specifically that they're known to slow down macOS, not play well with TRIM and even create instability at times. This is due to the Phoenix controller found on Samsung drives that macOS isn't too fond of, much preferring the Phison controller found in Sabrent Rocket drives and Western Digital's in-house controllers(WD SN750). The easiest way to see this is with boot up, most systems running Samsung drives will have extra long boot times and have their drives run hotter due to the software TRIM failing(hardware TRIM still should be enabled but no partiality). Also some older Intel drives and Kingston NVMe drives also experience these issues.

And while not an issue anymore, do note that all of Apple's PCIe drives are 4K sector-based so for best support only choose drives with such sectors.

Note for laptop users: Intel SSDs don't always play nicely with laptops and can cause issues, avoid when possible

SSD/Storage Options that are NOT supported:

Samsung PM981 and PM991 (commonly found in OEM systems like laptops) — Even if PM981 has been fixed with NVMeFix version 1.0.2 there is still plenty of kernel panics issues

Micron 2200S — Many users have report boot issues with this drive

SK Hynix PC711 — The proprietary Hynix NVMe controller on this drive is not supported at all, and it will not boot with macOS.

It's difficult to make sense of all these reports because of edge cases and lack of comprehensive study.

I use an SK Hynix Platinum P41 on an Asus Z590 Hero with 11th gen, from Monterey through Ventura 13.3, and it's 100% reliable and the fastest of the 4 drives I have used, including the 980 Pro.

...SSDs to avoid:

Samsung 970 Evo Plus (While not natively supported out of the box, a firmware update from Samsung will allow these drives to operate in macOS)

For all NVMe SSDs, its recommended to use NVMeFix.kext to fix power and energy consumption on these drives.

SSD hall of fame needs new entries #192


github.com

(Apr 9, 2021) After our discovery of a severe bug [refers to the SetApfsTrimTimeout patch] in the TRIM implementation of practically all Samsung SSDs we spent time investigating which SSDs are affected by all kinds of issues, and so far came with several names worth mentioning.

Working with TRIM broken (can be used with TRIM disabled [My personal experience with 980 Pro contradicts this claim] at slower boot times, or as a data storage):

Samsung 950 Pro

Samsung 960 Evo/Pro

Samsung 970 Evo/Pro

Working fine with TRIM:

Western Digital Blue SN550

Western Digital Black SN700

Western Digital Black SN720

Western Digital Black SN750 (aka SanDisk Extreme PRO)

Western Digital Black SN850

Intel 760p (including OEM models, e.g. SSDPEMKF512G8)

Crucial P1 1TB NVME (SM2263EN) (need more tests)

Working fine with TRIM (SATA):

SATA PLEXTOR M5Pro

SATA Samsung 850 PRO (need more tests)

SATA Samsung 870 EVO (need more tests)

Working fine with TRIM (Unbranded SSDs):

KingDian S280

Kingchuxing 512GB

Incompatible with IONVMeFamily (die under heavy load):

GIGABYTE 512 GB M.2 PCIe SSD (e.g. GP-GSM2NE8512GNTD) (need more tests)

ADATA Swordfish 2 TB M.2-2280

SK Hynix HFS001TD9TNG-L5B0B

SK Hynix P31

Samsung PM981 models

Micron 2200V MTFDHBA512TCK

Asgard AN3+ (STAR1000P)

Netac NVME SSD 480

...cont.
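To make the quoted lists easier to query, here is a minimal Python sketch that encodes the NVMe categories as a dictionary and looks a model up by its name tokens. The model names and groupings come from the list above; the dictionary keys, the `lookup` function, and the token-matching rule are my own illustrative assumptions, not anything published by the issue's authors. An empty result only means the model is not in this list, not that the drive is safe.

```python
import re
from typing import List, Set

# Model names copied from the quoted list (NVMe categories only).
# The category keys are hypothetical labels chosen for this sketch.
REPORTS = {
    "trim_broken": [
        "Samsung 950 Pro",
        "Samsung 960 Evo/Pro",
        "Samsung 970 Evo/Pro",
    ],
    "trim_ok": [
        "Western Digital Blue SN550",
        "Western Digital Black SN700",
        "Western Digital Black SN720",
        "Western Digital Black SN750 (aka SanDisk Extreme PRO)",
        "Western Digital Black SN850",
        "Intel 760p (including OEM models, e.g. SSDPEMKF512G8)",
        "Crucial P1 1TB NVME (SM2263EN) (need more tests)",
    ],
    "dies_under_load": [
        "GIGABYTE 512 GB M.2 PCIe SSD (e.g. GP-GSM2NE8512GNTD) (need more tests)",
        "ADATA Swordfish 2 TB M.2-2280",
        "SK Hynix HFS001TD9TNG-L5B0B",
        "SK Hynix P31",
        "Samsung PM981 models",
        "Micron 2200V MTFDHBA512TCK",
        "Asgard AN3+ (STAR1000P)",
        "Netac NVME SSD 480",
    ],
}


def _tokens(text: str) -> Set[str]:
    """Lower-case the text and split it on whitespace, slashes, commas and parentheses."""
    return {t for t in re.split(r"[\s/(),]+", text.casefold()) if t}


def lookup(model: str) -> List[str]:
    """Return every category holding an entry that contains all tokens of `model`."""
    wanted = _tokens(model)
    if not wanted:
        return []
    return [
        category
        for category, names in REPORTS.items()
        if any(wanted <= _tokens(name) for name in names)
    ]


if __name__ == "__main__":
    for query in ("960 Pro", "SN850", "980 Pro"):
        print(query, "->", lookup(query))
```

Matching on whole tokens is deliberate: a query for "SN850X" returns nothing, because the X model is not itself in the quoted list.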

Posted by osy (Dec 24, 2022)

My machine is an Intel NUC i7-8809G running macOS 12.6.1 with FileVault enabled.

1. Samsung 860 EVO M.2 SATA 1TB This was the drive I originally had and it worked fine for years. TRIM was enabled.

2. HP EX920 M.2 NVME 1TB Two years ago, I bought this with the intention of replacing the SATA drive but I had random panics. Probably due to high loads. Didn't do too much testing so I didn't know what the issue was or what the TRIM status was.

3. Mushkin Enhanced Helix-L M.2 NVME 1TB I bought this a year ago, again with the intention of replacing the SATA drive. Also had random panics and actually managed to kill the drive, probably due to extreme IO loads. Returned it a week later. Do not ever buy this brand.

4. Samsung 980 PRO w/ Heatsink M.2 NVME 2TB I bought this before reading the first post. Had slow boot times that were NOT solved by SetApfsTrimTimeout. In fact, I was not able to boot my primary partition (FileVault enabled) at all. It just got stuck at the loading screen for maybe half an hour before rebooting with a panic. I can boot into my second install (unencrypted) but it would quickly panic when under load.

5. Kingston NV2 M.2 NVME 2TB I bought this last week because there was a TechPowerUp review that gave a really good score and said the NV2 had a Phison controller. Turns out, after reading some Amazon reviews, either Kingston sent reviewers a different sample or the 1TB model uses a different controller. The 2TB version has a Silicon Motion controller and panics on high loads.

6. Western Digital Black SN850X M.2 NVME 2TB This worked perfectly (two weeks so far without any panics). This was expected from the first post, but I can confirm the X model didn't change anything drastically.

I think having FileVault enabled really exacerbates the issue. With any NVME that panics with a heavy IO load, it will also instantly panic when booting into my FileVault enabled installation.

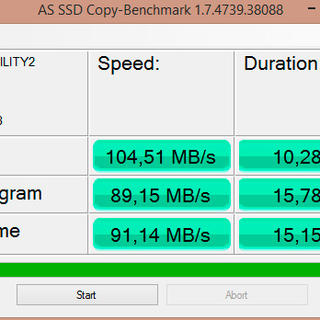

I don't see enough people talk about HMB and DRAMless SSDs which are the majority of "cheap" NVMEs. macOS does not support HMB and so will get worse random I/O throughput. The performance on sequential reads shouldn't be affected and that's what everyone seems to run on benchmarks. I found that "low throughput" seems to be one of the factors that cause macOS to panic. For example, here is a benchmark run on the Kingston NV2 on macOS... [Continued at above link]

Acidanthera NVMeFix.kext

github.com

NVMeFix is a set of patches for the Apple NVMe storage driver, IONVMeFamily. Its goal is to improve compatibility with non-Apple SSDs. It may be used both on Apple and non-Apple computers.

The following features are implemented:

* Autonomous Power State Transition to reduce idle power consumption of the controller.

* Host-driver active power state management.

* Workaround for timeout panics on certain controllers (VMware, Samsung PM981).

Other incompatibilities with third-party SSDs may be addressed provided enough information is submitted to our bugtracker.

Unfortunately, some issues cannot be fixed purely by a kernel-side driver. For example, MacBookPro 11,1 EFI includes an old version of NVMHCI DXE driver that causes a hang when resuming from hibernation with full disk encryption on.

LINUX COMMANDS FOR TRIM ADMINISTRATION

nvme-dsm (Ubuntu) man page

manpages.ubuntu.com

fstrim(8) man page

Running fstrim frequently, or even using mount -o discard, might negatively affect the lifetime of poor-quality SSD devices. For most desktop and server systems a sufficient trimming frequency is once a week. Note that not all devices support a queued trim, so each trim command incurs a performance penalty on whatever else might be trying to use the disk at the time.
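As a rough illustration of the once-a-week guidance, the Python sketch below decides whether a trim is due and builds the corresponding `fstrim` command line without running it. The function names and the idea of tracking a last-run timestamp yourself are assumptions for illustration; the only real CLI detail used is `fstrim -v <mountpoint>`. In practice many Linux distributions already schedule this with a systemd timer, so treat this as a sketch of the logic rather than something to deploy.

```python
from datetime import datetime, timedelta
from typing import List, Optional

# One week, per the man page's "sufficient trimming frequency" remark.
WEEKLY = timedelta(weeks=1)


def trim_due(last_run: Optional[datetime], now: datetime,
             interval: timedelta = WEEKLY) -> bool:
    """Return True if no trim has been recorded or the interval has elapsed."""
    return last_run is None or (now - last_run) >= interval


def fstrim_command(mountpoint: str = "/") -> List[str]:
    """Build (but do not execute) a verbose fstrim invocation for one mount point."""
    return ["fstrim", "-v", mountpoint]


if __name__ == "__main__":
    last = datetime(2023, 4, 1, 3, 0)   # hypothetical previous run
    now = datetime(2023, 4, 9, 3, 0)    # eight days later
    if trim_due(last, now):
        # Running the command needs root; here it is only printed.
        print(" ".join(fstrim_command("/")))
```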

Linux fstab(8)

discard: If set, causes discard/TRIM commands to be issued to the block device when blocks are freed. This is useful for SSD devices and sparse/thinly-provisioned LUNs.
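To check which filesystems are mounted with continuous discard, a minimal Python sketch can scan fstab-style text for the option. It assumes the usual whitespace-separated fstab layout, with mount options as the fourth field; the function name and the sample entries (including the UUIDs) are made up for illustration.

```python
from typing import List


def mounts_with_discard(fstab_text: str) -> List[str]:
    """Return mount points whose options field (4th column) includes 'discard'."""
    found = []
    for line in fstab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) < 4:
            continue  # not a complete fstab entry
        options = fields[3].split(",")
        # Compare the option name only, so a key=value form also counts.
        if any(opt.split("=", 1)[0] == "discard" for opt in options):
            found.append(fields[1])
    return found


# Hypothetical fstab content for demonstration.
SAMPLE = """\
# <device>      <mount>  <type>  <options>         <dump>  <pass>
UUID=aaaa-1111  /        ext4    defaults,discard  0       1
UUID=bbbb-2222  /home    ext4    defaults,noatime  0       2
"""

if __name__ == "__main__":
    print(mounts_with_discard(SAMPLE))  # ['/']
```

On a real system the text would come from reading `/etc/fstab`. Given the fstrim caution quoted earlier, an entry flagged here is a candidate for switching to periodic trimming instead.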

FURTHER READING

500 SSDs Listed by Brand, Model, Interface, Form Factor, Capacities, Controller, Cores, DRAM, HMB, NAND Brand, NAND Type, Layers, Categories, Notes, Product Page

docs.google.com

The Ultimate Guide to Apple’s Proprietary SSDs

(Higher level presentation than I would wish, but still a nice review of Mac-specific SSD tech.)

www.tonymacx86.com
