Hi, I'm running an RX 560, which is much slower than an RX 580.

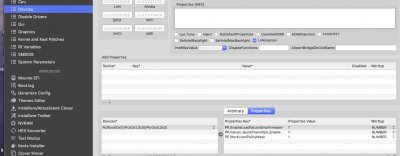

But when I use those Clover properties (see screenshot), I also get about a 30% boost in both the Metal and the OpenCL Geekbench 5 scores.

Besides that, as some others have reported, I can't see any boost in other OpenCL or Metal benchmarks. Of course, other benchmarks, even OpenCL ones, may use different code, so some difference in the boost percentage is expected. But in Luxmark (also OpenCL) or Basemark (a Metal game benchmark, https://www.basemark.com/benchmarks/basemark-gpu/), for example, the boost is really close to 0%. I don't want to say it has no general effect (some mention higher VRAM bandwidth), but to me it's unclear whether the boost only happens under very specific conditions, or even only in Geekbench.
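To compare "boost" figures across different benchmarks consistently, it helps to compute them the same way: the relative change of the score with the Clover properties versus without them. Below is a minimal sketch; the helper name `boost_percent` and the scores in the usage lines are hypothetical, chosen only to illustrate a ~30% gain versus a gain near zero, and are not measured results.

```python
def boost_percent(before: float, after: float) -> float:
    """Relative change of a benchmark score in percent (positive = faster).

    Assumes a "higher is better" score, as in Geekbench or Luxmark.
    """
    if before <= 0:
        raise ValueError("baseline score must be positive")
    return (after - before) / before * 100.0


# Hypothetical scores, not real measurements:
print(round(boost_percent(1000, 1300), 1))  # 30.0
print(round(boost_percent(1000, 1004), 1))  # 0.4
```

For benchmarks that report time or frame time (lower is better), swap the arguments, or compare throughput values instead.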

Feel free to use my AMD GPU Menu tool to check, for example, whether GPU/VRAM clocking for power management is working. It displays a lot of RX 4xx/5xx properties like GPU/VRAM clock (MHz), temperature, power draw (W) etc., plus it detects and shows whether AMD HW decoding/encoding is in use. Normally you can't check that, because even when HW dec/enc is active, the GPU load is very low. The screenshot shows HW Enc in action = Yes, while even VideoProc shows wrong encoder information.

View attachment 468761

View attachment 468762

PS: My AMD GPU Menu tool doesn't need any sensor kexts! It gets the values directly from the AMD driver. That's also the reason why the tool only works with RX 4xx/5xx cards and not with Vega, Radeon VII, ...: the driver doesn't provide those values for them.