- Joined: Nov 17, 2020
- Messages: 74
- Motherboard: Gigabyte Z490I Aorus Ultra
- CPU: i9-10900K
- Graphics: RX 5500 XT
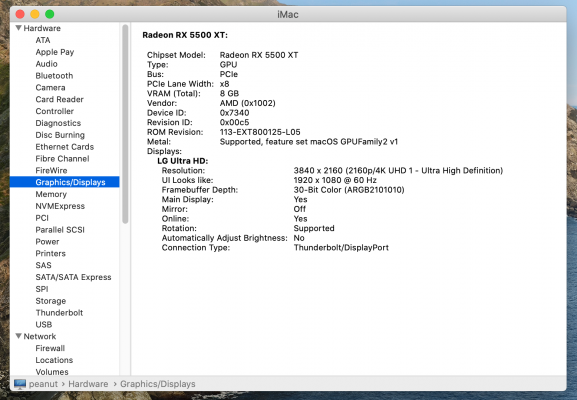

I'm having an issue getting my display to output higher than 1080p. I can adjust the scaling in System Preferences to get a higher resolution, but I feel like I shouldn't have to do that... maybe? I've got a 5K iMac that defaults to 2560x1440, so I would expect similar behavior from the 4K screen. It might not be cranked all the way up to 4K, but I would expect better than 1080p for sure.
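
For comparison, here is the arithmetic behind the two defaults. This is a rough sketch, assuming both screens run in a 2x HiDPI mode and that the panels are the standard 5120x2880 (5K) and 3840x2160 (4K UHD) grids; neither native size is stated in the post. The function names are illustrative only.

```python
# Sketch: in a 2x HiDPI mode, the "looks like" size macOS reports is the
# native pixel grid divided by the backing scale factor.
from typing import Tuple


def looks_like(native: Tuple[int, int], scale: int = 2) -> Tuple[int, int]:
    """Point resolution of a HiDPI mode (assumes an integer scale factor)."""
    width, height = native
    return width // scale, height // scale


def backing_store(looks: Tuple[int, int], scale: int = 2) -> Tuple[int, int]:
    """Pixels rendered for a "Scaled" mode before downsampling to the panel."""
    width, height = looks
    return width * scale, height * scale


# Assumed native panel sizes (standard 5K and 4K UHD).
panels = {
    "5K iMac (5120x2880)": (5120, 2880),
    "4K display (3840x2160)": (3840, 2160),
}

for name, native in panels.items():
    w, h = looks_like(native)
    print(f"{name}: looks like {w}x{h} at 2x")
```

Under those assumptions, both defaults follow the same 2x rule, and a "Scaled" choice such as "looks like 2560x1440" on the 4K screen would render a 5120x2880 backing store and downsample it to the panel.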

For what it's worth, if I switch the display to a "Scaled" option, I can get something closer to what you would expect "out of the box." However, the login screen still renders at 1080p, and presumably so would any other user accounts I create, since I'm only changing my personal preference in System Preferences.

I'm running OpenCore (whatever the newest version is; I updated it last night). I verified my config.plist with the Sanity Checker and confirmed that NVRAM -> Add -> 4D1EDE05-38C7-4A6A-9CC6-4BCCA8B38C14 -> `UIScale` is `02`, along with the other things listed here, except for setting AppleDebug to false (I'm still debugging, after all).
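
One way to double-check these values without eyeballing the file is to load config.plist with Python's standard `plistlib` module. This is a minimal sketch, assuming the usual OpenCore layout where the scaling value sits under the `UIScale` key of that GUID and `AppleDebug` sits under Misc -> Debug. The function names are hypothetical, and the script only reads the file.

```python
# Sketch: report UIScale and AppleDebug from an OpenCore config.plist.
# Assumes the standard OpenCore key layout; function names are hypothetical.
import plistlib
import sys

APPLE_GUID = "4D1EDE05-38C7-4A6A-9CC6-4BCCA8B38C14"


def summarize(config: dict) -> dict:
    """Pull the two settings of interest out of a parsed config dict."""
    nvram_add = config.get("NVRAM", {}).get("Add", {})
    ui_scale = nvram_add.get(APPLE_GUID, {}).get("UIScale")
    apple_debug = config.get("Misc", {}).get("Debug", {}).get("AppleDebug")
    return {
        # <data> values load as bytes; show them as hex, e.g. b"\x02" -> "02"
        "UIScale": ui_scale.hex() if isinstance(ui_scale, bytes) else ui_scale,
        "AppleDebug": apple_debug,
    }


def check_config(path: str) -> dict:
    """Parse a plist file from disk and summarize it."""
    with open(path, "rb") as fh:
        return summarize(plistlib.load(fh))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_oc.py /path/to/EFI/OC/config.plist
    for key, value in check_config(sys.argv[1]).items():
        print(f"{key}: {value}")
```

As far as I know, `UIScale` scales OpenCore's early boot UI (picker, FileVault prompt); it is not the setting that decides which resolution macOS uses once it has loaded.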

I know this has got to be something dumb that I'm overlooking because I'm new to this, so I'm looking forward to someone pointing out the stupid thing I forgot to do.

Video card: https://www.newegg.com/asrock-radeo...gd-8go/p/N82E16814930027?Item=N82E16814930027
