
Recently I got a Supermicro AOC-SLG3-2M2 dual M.2 card. It's inexpensive and holds two x4 M.2 NVMe drives, but the PCIe slot it goes in has to support bifurcation (splitting the slot's lanes into two separate x4 links). How you enable that depends on your chipset: the newest ones can handle it automatically, but on most X99 boards it's not so simple. I followed this guide on modifying the UEFI variables for IIO configuration to bifurcate my bottom PCIe slot: https://www.win-raid.com/t3323f16-Guide-How-to-Bifurcate-a-PCI-E-slot.html

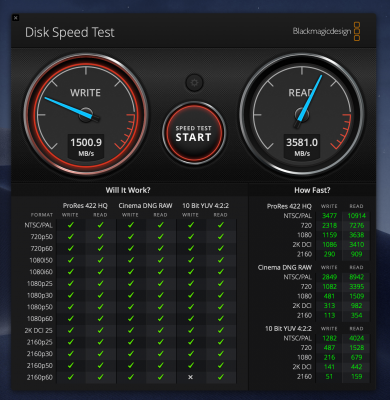

It's feeling pretty snappy. The benchmark result is crazy fast!

The offset the guide's author found, 0x539, was the same on my board, and like the author I only had to change that one value to the x4x4 setting. I used the same UEFI shell USB stick I already use for running bcfg boot commands. From it I was able to run RU, open the UEFI variables with Alt-=, and then make the changes in IntelSetup as described.
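Once the slot is bifurcated, one quick way to confirm that both drives came up is to count the x4 links macOS reports. This is a minimal sketch that assumes the output of `system_profiler SPNVMeDataType` contains one `Link Width:` line per NVMe drive; `count_x4_links` and `check_bifurcation` are hypothetical helper names, not existing tools.

```shell
#!/bin/sh
# Count NVMe drives reporting a x4 link. Input on stdin is assumed to be a
# report such as `system_profiler SPNVMeDataType` output, with one
# "Link Width:" line per drive (an assumption about its layout).
count_x4_links() {
  # grep -c prints 0 and exits non-zero when nothing matches;
  # "|| true" keeps the status at 0 so callers just get the count.
  grep -c 'Link Width: x4' || true
}

# check_bifurcation [EXPECTED]: succeed if at least EXPECTED drives
# (default 2, one per M.2 slot on the card) report a x4 link.
check_bifurcation() {
  expected="${1:-2}"
  found=$(count_x4_links)
  found="${found:-0}"   # treat an empty result as zero drives
  if [ "$found" -ge "$expected" ]; then
    echo "OK: $found drive(s) at x4"
  else
    echo "only $found drive(s) at x4; check the bifurcation setting" >&2
    return 1
  fi
}
```

On the Mac you would pipe the report in, e.g. `system_profiler SPNVMeDataType | check_bifurcation 2`. If only one drive shows up, that's a hint the bifurcation change didn't take.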

My system runs Mojave, by the way. Doing this on High Sierra or Catalina could also make sense, but I don't know whether there are differences.

With both SSDs recognized, I opened Disk Utility and started RAID Assistant, selected the two drives for an APFS RAID 0 volume, and created it. At this point I had some trouble cloning to the new volume with Disk Utility (big surprise), so I recommend using the command line instead:

```shell
sudo asr restore --source /dev/diskN --target /dev/diskM --erase
```

Here N is the disk number of the APFS container holding the running Mojave system, and M is the disk number of the APFS container that was just created in Disk Utility. M is not the number of either of the member disks in the RAID set!
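Because the disk numbers (as listed by `diskutil list`) are easy to mix up, it can help to sanity-check N and M before running `asr`. This is a minimal sketch of a hypothetical `build_asr_cmd` wrapper, not part of `asr` itself: it only prints the clone command after checking that both numbers are numeric, that they differ, and that M is not one of the RAID member disk numbers you pass in.

```shell
#!/bin/sh
# build_asr_cmd N M [MEMBER...]  (hypothetical helper)
# Prints the asr clone command instead of running it.
build_asr_cmd() {
  if [ "$#" -lt 2 ]; then
    echo "usage: build_asr_cmd N M [member-disk-number ...]" >&2
    return 2
  fi
  src="$1"; dst="$2"; shift 2
  # Both values must be plain disk numbers such as 1 or 5.
  for n in "$src" "$dst"; do
    case "$n" in
      ''|*[!0-9]*) echo "not a disk number: '$n'" >&2; return 1 ;;
    esac
  done
  if [ "$src" = "$dst" ]; then
    echo "source and target are the same disk" >&2
    return 1
  fi
  # Refuse a target that is one of the RAID members: M must be the
  # new APFS container, not a physical SSD in the set.
  for member in "$@"; do
    if [ "$dst" = "$member" ]; then
      echo "disk$dst is a RAID member, not the new APFS container" >&2
      return 1
    fi
  done
  echo "sudo asr restore --source /dev/disk$src --target /dev/disk$dst --erase"
}
```

For instance, `build_asr_cmd 1 5 2 3` prints the command with `/dev/disk1` as source and `/dev/disk5` as target, while `build_asr_cmd 1 2 2 3` refuses because disk2 is listed as a member. These numbers are made up; read the real ones from your own system and run the printed line yourself.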

There was a minor error message at the very end, as I recall, which I ignored. At this point I also copied my EFI folder over to one of the SSDs in the RAID set (each one gets created with an empty EFI partition) and set up my BIOS to boot from there.
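The EFI copy can also be done from Terminal. This is a sketch under assumptions: `copy_efi` and the mount points `/Volumes/ESP_SRC` and `/Volumes/ESP_DST` are hypothetical names, the partition identifiers (such as `disk0s1`) are placeholders you would read from `diskutil list`, and by default it only prints the commands (`DRY_RUN=1`) so you can review them first.

```shell
#!/bin/sh
# run: print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# copy_efi SRC_ESP DST_ESP  (hypothetical helper)
# SRC_ESP: EFI partition you currently boot from, e.g. disk0s1 (placeholder)
# DST_ESP: empty EFI partition on one RAID member SSD, e.g. disk2s1 (placeholder)
copy_efi() {
  if [ "$#" -ne 2 ]; then
    echo "usage: copy_efi SRC_ESP DST_ESP" >&2
    return 2
  fi
  src="$1"; dst="$2"
  run sudo mkdir -p /Volumes/ESP_SRC /Volumes/ESP_DST
  # EFI partitions are FAT-formatted, which macOS mounts as msdos.
  run sudo mount -t msdos "/dev/$src" /Volumes/ESP_SRC || return 1
  run sudo mount -t msdos "/dev/$dst" /Volumes/ESP_DST || return 1
  # Copy the whole EFI folder onto the empty partition.
  run sudo cp -R /Volumes/ESP_SRC/EFI /Volumes/ESP_DST/ || return 1
  run sudo umount /Volumes/ESP_SRC
  run sudo umount /Volumes/ESP_DST
}
```

Set `DRY_RUN=0` only once the printed commands point at the right partitions, then set the BIOS to boot from that SSD.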

There's one last trick: at this point the system gets stuck in a reboot loop tied to the caches rebuild. I found the solution here: https://forums.macrumors.com/thread...-a-howto.2125096/?post=26636374#post-26636374

You just have to boot into single-user mode, remount the root volume read/write, and delete the file /usr/standalone/bootcaches.plist.
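At the single-user prompt this comes down to two commands, `/sbin/mount -uw /` and `rm /usr/standalone/bootcaches.plist`. The sketch below wraps them in a hypothetical `remove_bootcaches` function; the optional root-prefix argument is an assumption added only so the deletion step can be tried on a scratch directory, in which case the remount is skipped.

```shell
#!/bin/sh
# remove_bootcaches [ROOT]  (hypothetical helper)
# With no argument (as at the single-user prompt): remount / read/write and
# delete /usr/standalone/bootcaches.plist. With a ROOT prefix (for testing on
# a scratch copy): skip the remount and work under ROOT instead.
remove_bootcaches() {
  root="${1:-}"
  plist="$root/usr/standalone/bootcaches.plist"
  if [ -z "$root" ]; then
    # The root volume is mounted read-only in single-user mode.
    /sbin/mount -uw / || return 1
  fi
  if [ -f "$plist" ]; then
    rm "$plist" && echo "removed $plist"
  else
    echo "not found: $plist"
  fi
}
```

After the file is gone, `reboot` and the loop should be broken.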
