You pushed me toward an interesting idea.

What if the chipset cannot find the right GPU SSDT tables because the GPU is unknown, and so it injects the wrong SSDT?

So here is what I have done:

Using F4 in Clover, I dumped the OEM tables with different configurations: Intel+AMD, AMD only, Intel+AMD+NVIDIA eGPU, and AMD+NVIDIA eGPU.

Note: the laptop has two sets of SSDT configs: ATIGFX and NVIDIAGF for the GPU that is the main one, and AMDSGTBL and NVSGTBL for the internal GPU.
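To compare which table got injected in each configuration, the OEM Table ID can be read straight from the header of each dumped `.aml` file. This is only a minimal sketch: it assumes the standard 36-byte ACPI table header, and the function names and the dump folder passed in are hypothetical (use wherever your F4 dump was saved).

```python
import struct
from pathlib import Path

# Standard 36-byte ACPI table header, little-endian:
# signature, length, revision, checksum, OEM ID, OEM Table ID,
# OEM revision, creator ID, creator revision.
ACPI_HEADER = struct.Struct("<4sIBB6s8sI4sI")


def _text(raw: bytes) -> str:
    """Decode a fixed-width header field, dropping NUL/space padding."""
    return raw.rstrip(b"\x00 ").decode("ascii", errors="replace")


def read_header(data: bytes) -> dict:
    """Parse the ACPI header at the start of a dumped table."""
    sig, length, rev, _csum, oem_id, table_id, _orev, _cid, _crev = (
        ACPI_HEADER.unpack_from(data, 0)
    )
    return {
        "signature": _text(sig),
        "length": length,
        "revision": rev,
        "oem_id": _text(oem_id),
        "oem_table_id": _text(table_id),
    }


def summarize_dump(folder: str) -> list:
    """Return (file name, signature, OEM Table ID) for every .aml in a folder."""
    rows = []
    for path in sorted(Path(folder).glob("*.aml")):
        data = path.read_bytes()
        if len(data) < ACPI_HEADER.size:
            continue  # too short to be an ACPI table
        info = read_header(data)
        rows.append((path.name, info["signature"], info["oem_table_id"]))
    return rows
```

Running `summarize_dump()` on the dump folder of each configuration and comparing the results should show directly whether the eGPU got NVIDIAGF or NVSGTBL.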

“SGTBL” looks like a suffix. My guess is that it abbreviates something like “secondary graphic T backlight”, though “switchable graphics table” also seems plausible.

The next step was to extract the GPU SSDTs from the G4 ROM, since all the SSDT and DSDT tables are stored in the ROM as AML files. Ten years ago I replaced the BIOS tables of an Asus P5QL Pro with ones patched for Hackintosh, and it worked fully like a real Mac. But after 10 years I have forgotten everything about ACPI patching. So, back to the topic))

The G4 GPU SSDT tables are really different. I will find out later what the differences are.
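For the ROM extraction step, here is a rough sketch of how tables could be located in a raw image: search for the `SSDT` signature, read the length field, and keep only candidates whose bytes sum to zero modulo 256 (the standard ACPI checksum rule). The function name and the size limit are my own assumptions. Note that firmware images often store their modules compressed, so a raw scan may find nothing until the image has been unpacked (for example with UEFITool).

```python
import struct

HEADER_SIZE = 36  # standard ACPI table header length


def find_acpi_tables(blob: bytes, signature: bytes = b"SSDT",
                     max_len: int = 1 << 20):
    """Yield (offset, OEM Table ID, table bytes) for each plausible table."""
    pos = blob.find(signature)
    while pos != -1:
        if pos + HEADER_SIZE <= len(blob):
            # The 32-bit little-endian table length follows the signature.
            (length,) = struct.unpack_from("<I", blob, pos + 4)
            end = pos + length
            if HEADER_SIZE <= length <= max_len and end <= len(blob):
                table = blob[pos:end]
                # A valid ACPI table sums to 0 modulo 256 over all its bytes.
                if sum(table) % 256 == 0:
                    # The OEM Table ID occupies header bytes 16..23.
                    table_id = table[16:24].rstrip(b"\x00 ").decode(
                        "ascii", errors="replace")
                    yield pos, table_id, table
        pos = blob.find(signature, pos + 1)
```

Each hit can be written out as its own `.aml` file and decompiled with `iasl -d` to compare it against the tables dumped by Clover.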

Back to the beginning:

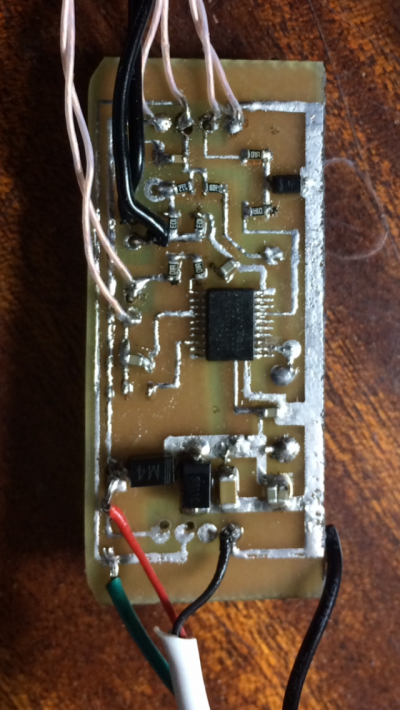

In the image I show the different hardware configs and which tables the chipset injects for each of them. (I hope Clover is not the one selecting the SSDT tables; otherwise it will be really hard to tell the bootloader how to select the proper SSDTs.)

View attachment 516686

So when the eGPU is connected, it doesn't work, because:

- When Intel is in Hybrid mode, the AMD is secondary (AMDSGTBL) and the eGPU is also secondary (NVSGTBL), but it should be NVIDIAGF.

- With Discrete only + eGPU, both GPUs are main, but the screens are black because both of them are GFX0@0, so the OS recognizes them as one GPU. The SSDT configs are ATIGFX and NVIDIAGF.

So to make the eGPU work, I need to force-inject NVIDIAGF and patch (I don't know how or where) the PCI or ACPI path to make it GFX0@1 or GFX1@0.

Upd 1: After some steps I can't pin down, the OS now loads with the eGPU, but the last problem is that the NVIDIA card doesn't get any image. Intel is working on the NVIDIA ports because the switchable DSDT is applied, and I can't force-reinject it or replace it with a standalone DSDT.