[Solved] Fixes to Get Full Acceleration on Intel HD Graphics in iMac 17.1

- Status

- Not open for further replies.

- Joined

- May 13, 2016

- Messages

- 60

- Motherboard

- GIGABYTE GA-Z170-D3H-F22f

- CPU

- i5-6600

- Graphics

- HD 530

- Mac

- Mobile Phone

Hey everyone!

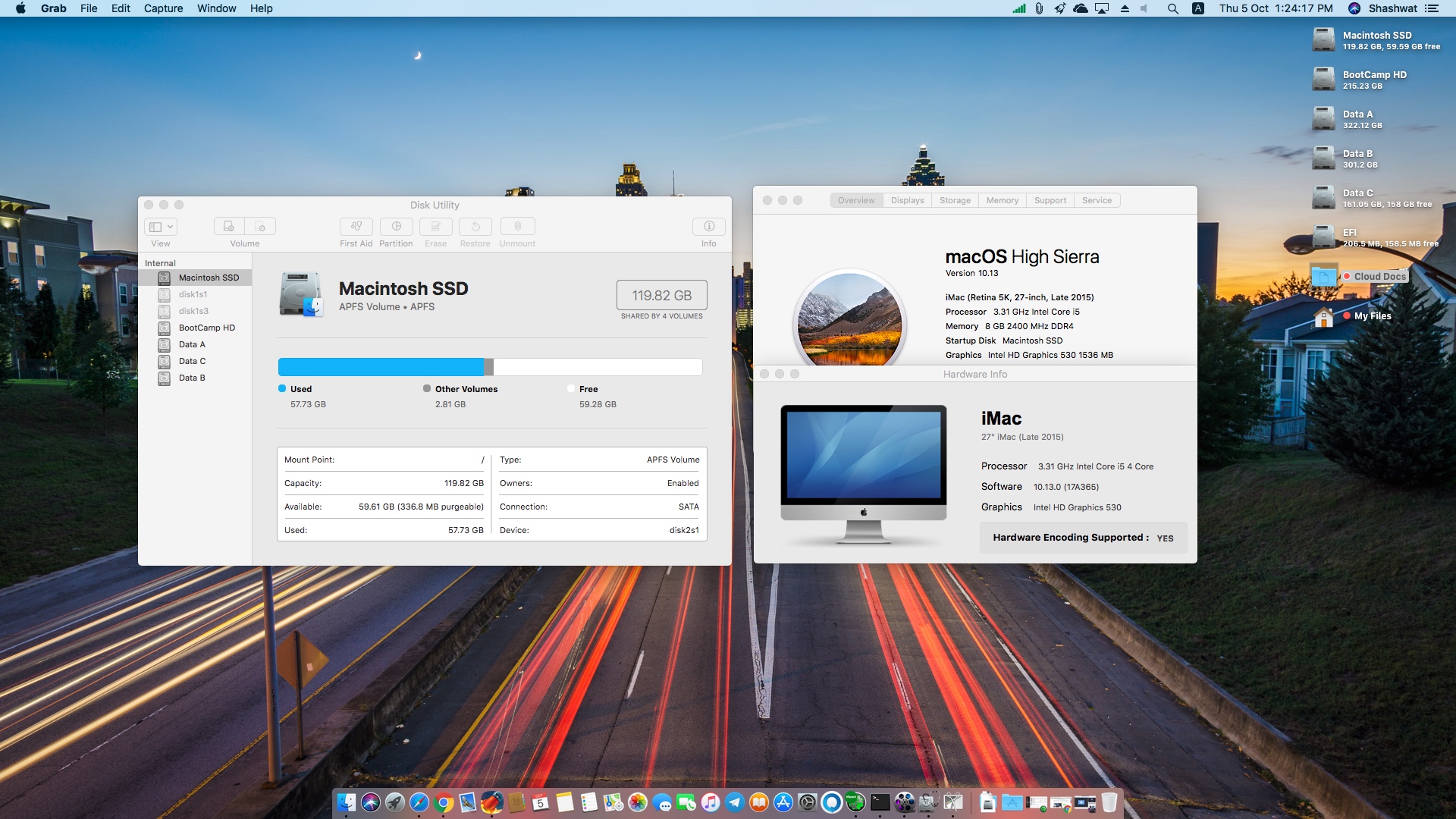

I successfully updated to High Sierra using this guide

https://www.tonymacx86.com/threads/update-directly-to-macos-high-sierra.232707/

Follow all steps in the above guide carefully.

I now have perfectly working HW acceleration, and everything works fine except audio (ALC 1150), which I'm still figuring out. Please help me if you know the fix.

Plus, I updated my SSD to APFS in the process.

To get acceleration, please update Lilu.kext, Shiki.kext, HibernationFixup (if required) and IntelGraphicsFixup.kext to their latest versions. Install these with KextBeast before performing the High Sierra update.
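Before updating, it can help to confirm which versions of these kexts are actually installed. The following is only a minimal sketch: it assumes the kexts live in a single directory (the `/Library/Extensions` path in the usage line is an assumption, adjust it to wherever you installed them) and that each bundle has the usual `Contents/Info.plist`. The function name `kext_versions` is made up for this example.

```python
import plistlib
from pathlib import Path

# Kext names taken from the post above; add HibernationFixup.kext if you use it.
REQUIRED_KEXTS = ["Lilu.kext", "Shiki.kext", "IntelGraphicsFixup.kext"]


def kext_versions(kext_dir, names=REQUIRED_KEXTS):
    """Return {kext name: version string, or None if the kext is missing}."""
    result = {}
    for name in names:
        info = Path(kext_dir) / name / "Contents" / "Info.plist"
        if not info.is_file():
            result[name] = None
            continue
        with info.open("rb") as fh:
            data = plistlib.load(fh)
        # Prefer the marketing version, fall back to the build version.
        result[name] = data.get("CFBundleShortVersionString") or data.get("CFBundleVersion")
    return result


if __name__ == "__main__":
    # Assumed install location; change it if your kexts are elsewhere.
    for name, version in kext_versions("/Library/Extensions").items():
        print(f"{name}: {version or 'not found'}")
```

This only reports what is on disk; it does not tell you whether a kext was actually loaded at boot.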

If you have enabled TRIM on your SSD, you might experience slower boot times due to a still-unknown issue. Disable TRIM for the time being and boot times should return to normal.

If anyone needs help, I'll be happy to assist.

Thanks

- Joined

- May 13, 2016

- Messages

- 60

- Motherboard

- GIGABYTE GA-Z170-D3H-F22f

- CPU

- i5-6600

- Graphics

- HD 530

- Mac

- Mobile Phone

Hi, I have acceleration and QuickSync working on High Sierra.

1. Install High Sierra and

2. Install Clover, or update it to the latest version, and with MultiBeast 9.2.1 apply the HD 530 patch and the iMac14,2 or iMac17,1 system definition (plus any other patches your system needs).

3. Install the following kexts in /System/Library/Extensions (they may also work from the EFI partition, in EFI/CLOVER/kexts/10.13):

View attachment 282148 View attachment 282149

4. With the EFI partition mounted, edit your config.plist with Clover Configurator and apply the patches shown in these pictures:

View attachment 282115 View attachment 282116 View attachment 282117

5. Restart the system and you should have full graphics acceleration. For sound, use toleda's ''audio_cloverALC-130_v0.3'' patch (and the HDMI patch).

View attachment 282111

I hope this helps!

Please elaborate on the use of View attachment 282115

- Joined

- May 22, 2011

- Messages

- 128

- Motherboard

- ASUS ROG MAXIMUS VIII GENE

- CPU

- i7 6700

- Graphics

- GTX 1070

- Mobile Phone

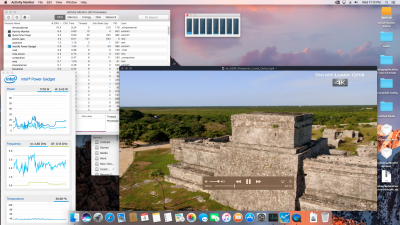

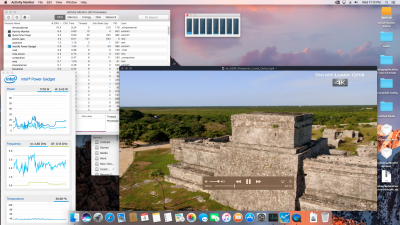

So the latest Lilu 1.2.0 and its plugins, Shiki and NvidiaGraphicsFixup, allow Skylake/Kaby Lake + Pascal GPU systems to get almost full acceleration. I tested with a high-bitrate MP4 video in QuickTime and with desktop screen recording via QuickTime. Both resulted in low CPU usage and used the Intel iGPU for decode/encode. According to the Lilu developer, I should be getting a successful VDADecoderChecker message, but I get this:

Code:

GVA info: Successfully connected to the Intel plugin, offline Gen9

AVDCreateGPUAccelerator: Error loading GPU renderer

VDADecoderCreate failed. err: -12473

An error was returned by the decoder layer. This may happen for example because of bitstream/data errors during a decode operation. This error may also be returned from VDADecoderCreate when hardware decoder resources are available on the system but currently in use by another process.

VDADecoderCreate failed. err: -12473

You can run the same check to see whether you are getting proper HW decoding outside QuickTime. I am attaching a zip file with the latest kexts; they were only available as source code, so I compiled them. Remember to add shikigva=4 as a custom boot flag in config.plist (this boot flag is only for Skylake and newer CPUs). Set Inject Intel=True and use a connector-less ig-platform-id; for my HD 530, I had to use "0x19120001". I also enabled the iGPU in BIOS as multi-monitor with >=64 MB DVMT pre-allocated memory.

Here are my results:

DECODING:

ENCODING:

YouTube in Safari still shows VTDecoderXPCService, which I believe is used for software decoding on the CPU rather than HW. But CPU usage is quite low there.
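The three config.plist settings mentioned in this post (the shikigva=4 boot flag, Inject Intel, and the connector-less ig-platform-id) can be sketched as a small script. This is an illustration only: it assumes the usual Clover layout with `Boot > Arguments`, `Graphics > Inject > Intel` and `Graphics > ig-platform-id`, and the function name `apply_igpu_settings` is made up for this example. Work on a backup copy of your config.plist.

```python
import plistlib


def apply_igpu_settings(config, platform_id="0x19120001", boot_flag="shikigva=4"):
    """Return a copy of a Clover config dict with the settings from the post applied.

    Assumed key layout: Boot/Arguments, Graphics/Inject/Intel, Graphics/ig-platform-id.
    """
    config = dict(config)

    # Append the boot flag only if it is not already present.
    boot = dict(config.get("Boot", {}))
    args = boot.get("Arguments", "").split()
    if boot_flag not in args:
        args.append(boot_flag)
    boot["Arguments"] = " ".join(args)
    config["Boot"] = boot

    graphics = dict(config.get("Graphics", {}))
    inject = graphics.get("Inject", {})
    # If Inject was a plain boolean, it is replaced by a dict that only sets Intel.
    inject = dict(inject) if isinstance(inject, dict) else {}
    inject["Intel"] = True
    graphics["Inject"] = inject
    graphics["ig-platform-id"] = platform_id
    config["Graphics"] = graphics
    return config


# In-memory example; "dart=0" is just a placeholder existing argument.
example = {"Boot": {"Arguments": "dart=0"}}
updated = apply_igpu_settings(example)
print(plistlib.dumps(updated).decode())
```

To use it on a real file, load the plist with `plistlib.load`, pass the result through the function, and write it back with `plistlib.dump`. The platform id shown is the one the poster used for an HD 530; other iGPUs need a different value.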

pastrychef

Moderator

- Joined

- May 29, 2013

- Messages

- 19,458

- Motherboard

- Mac Studio - Mac13,1

- CPU

- M1 Max

- Graphics

- 32 Core

- Mac

- Classic Mac

- Mobile Phone

Hi. Have you tried with High Sierra? I had it working with Sierra but I think the latest Supplemental Update broke it.

- Joined

- Jul 27, 2012

- Messages

- 10

- Motherboard

- ASUS H170 PRO GAMING

- CPU

- i5-6500

- Graphics

- GTX 1060

- Mac

- Mobile Phone

My High Sierra build without the Supplemental Update doesn't have QuickSync either.

- Joined

- May 22, 2011

- Messages

- 128

- Motherboard

- ASUS ROG MAXIMUS VIII GENE

- CPU

- i7 6700

- Graphics

- GTX 1070

- Mobile Phone

Hi. Have you tried with High Sierra? I had it working with Sierra but I think the latest Supplemental Update broke it.

@pastrychef No, I haven't tried with High Sierra. I formatted my dummy disk for HS, but yesterday, when I was about to test, the Supplemental Update came in (I don't have the previous HS USB right now) and the Nvidia drivers were still missing. Once they are updated, I'll test it. But if you are on last week's HS build, which has Nvidia web drivers, do try it out; it should work. Don't forget the boot argument.

My High Sierra build without Supp. Update doesn't have QuickSync either

Yeah, before using the latest Lilu and its plugins, I didn't have QuickSync in HS either.

Why are you using the connector-less ig-platform-id? Do you have a discrete graphics card? If it is an Nvidia card, it will not pass the VDADecoderChecker test. The Shiki GitHub page has a closed thread on this subject. I think it could work in High Sierra, since Nvidia fixed some problems with the drivers (NVWebDriverLibValFix.kext is no longer necessary). I think I will update my system after the Nvidia driver for the Supplemental Update is released.

Yes, I am using a dGPU with nothing connected to the Intel GPU. This is why connector-less is necessary: even on iMacs and MacBooks with dGPUs, macOS usually forwards decode/encode tasks to the Intel GPU for battery/power efficiency. And yes, I was also expecting the VDADecoderChecker test to fail, but according to the developer of Shiki, with these new plugins and Lilu it shouldn't show that result. You can find his response in the last few comments, along with my results, HERE.

- Joined

- Jul 27, 2012

- Messages

- 10

- Motherboard

- ASUS H170 PRO GAMING

- CPU

- i5-6500

- Graphics

- GTX 1060

- Mac

- Mobile Phone

I am already using the kexts you uploaded, with shikigva=4, PEG as primary, and IGPU multi-monitor enabled with DVMT=64MB (connector-less with 0x19120001). I also renamed HECI to IMEI, GFX0 to IGPU, and PEGP to GFX0. Still, my VDADecoderChecker result looks like this:

GVA info: Successfully connected to the Intel plugin, offline Gen9

AVDCreateGPUAccelerator: Error loading GPU renderer

VDADecoderCreate failed. err: -12473

I have a "Disable board-id check to prevent no signal (c) lvs1974, Pike R. Alpha, vit9696" Clover patch; I don't know whether it's related.
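When comparing results between machines, it can be handy to reduce the VDADecoderChecker output quoted above to a few facts. The sketch below only looks for strings that appear in this thread; it does not know what a successful run prints, so it reports what it finds rather than declaring success. The function name `summarize_vda_output` is made up for this example.

```python
import re


def summarize_vda_output(text):
    """Summarize VDADecoderChecker output using only strings quoted in this thread."""
    codes = []
    for match in re.finditer(r"VDADecoderCreate failed\. err: (-?\d+)", text):
        code = int(match.group(1))
        if code not in codes:  # keep each error code once, in order of appearance
            codes.append(code)
    return {
        "intel_plugin_connected": "Successfully connected to the Intel plugin" in text,
        "gpu_renderer_error": "Error loading GPU renderer" in text,
        "create_error_codes": codes,
    }


# Output copied from the post above.
sample = """GVA info: Successfully connected to the Intel plugin, offline Gen9
AVDCreateGPUAccelerator: Error loading GPU renderer
VDADecoderCreate failed. err: -12473
"""
print(summarize_vda_output(sample))
```

For the output in this thread, the summary shows that the Intel plugin connected but the GPU renderer failed to load, which matches the discussion about the discrete card's renderer.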

- Joined

- Jul 27, 2012

- Messages

- 10

- Motherboard

- ASUS H170 PRO GAMING

- CPU

- i5-6500

- Graphics

- GTX 1060

- Mac

- Mobile Phone

After a while of testing, I found that no IOVARendererID is injected under PEG0@1 or GFX0@0. Any idea on how to solve this?

- Joined

- May 22, 2011

- Messages

- 128

- Motherboard

- ASUS ROG MAXIMUS VIII GENE

- CPU

- i7 6700

- Graphics

- GTX 1070

- Mobile Phone

After a while of testing, I found that no IOVARendererID is injected under PEG0@1 or GFX0@0. Any idea on how to solve this?

This was supposed to be injected by NvidiaGraphicsFixup according to its changelog. Can you compile a new kext from the source code and see? It's available on SourceForge. Remember to precompile Lilu too.
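One way to re-check for the property after swapping kexts is to search the I/O Registry dump for it. The sketch below is an assumption-laden helper, not part of any of the kexts: it assumes `ioreg -l` prints properties as `"Name" = value` lines, and the sample text in the example is hypothetical. It only tells you whether the property exists anywhere, not which device node (PEG0@1, GFX0@0) carries it.

```python
import re
import subprocess


def find_property(ioreg_text, name="IOVARendererID"):
    """Return the value of every `"name" = value` line found in ioreg output."""
    pattern = re.compile(r'"%s"\s*=\s*(.+)$' % re.escape(name))
    values = []
    for line in ioreg_text.splitlines():
        match = pattern.search(line)
        if match:
            values.append(match.group(1).strip())
    return values


def read_ioreg():
    """Dump the I/O Registry on macOS (-l lists properties, -w 0 disables line wrapping)."""
    return subprocess.run(
        ["ioreg", "-l", "-w", "0"], capture_output=True, text=True, check=True
    ).stdout


# Hypothetical sample; on a real system use find_property(read_ioreg()).
sample_ioreg = '| |   "IOVARendererID" = 123\n| |   "model" = <"example">\n'
print(find_property(sample_ioreg) or "IOVARendererID not found")
```

An empty result after a reboot would be consistent with the property not being injected.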

Copyright © 2010 - 2024 tonymacx86 LLC